EE511 - Advanced Introduction to Machine Learning - Spring Quarter, 2020

This page is located at https://people.ece.uw.edu/bilmes/classes/ee511/ee511_spring_2020/.

Instructor:

Prof. Jeff A. Bilmes (Email me)

Office: 418 EE/CS Bldg., +1 206 221 5236

Office hours: Thursdays 10pm-12am, online via Zoom

TA

Ricky Zhang, Office: EEB-417

Time/Location

Class is held: Mo/We 10:30-12:30 via Zoom.

Announcements

- Sticky: All announcements for our class will be posted on Canvas and can be found at this link.

Machine Learning

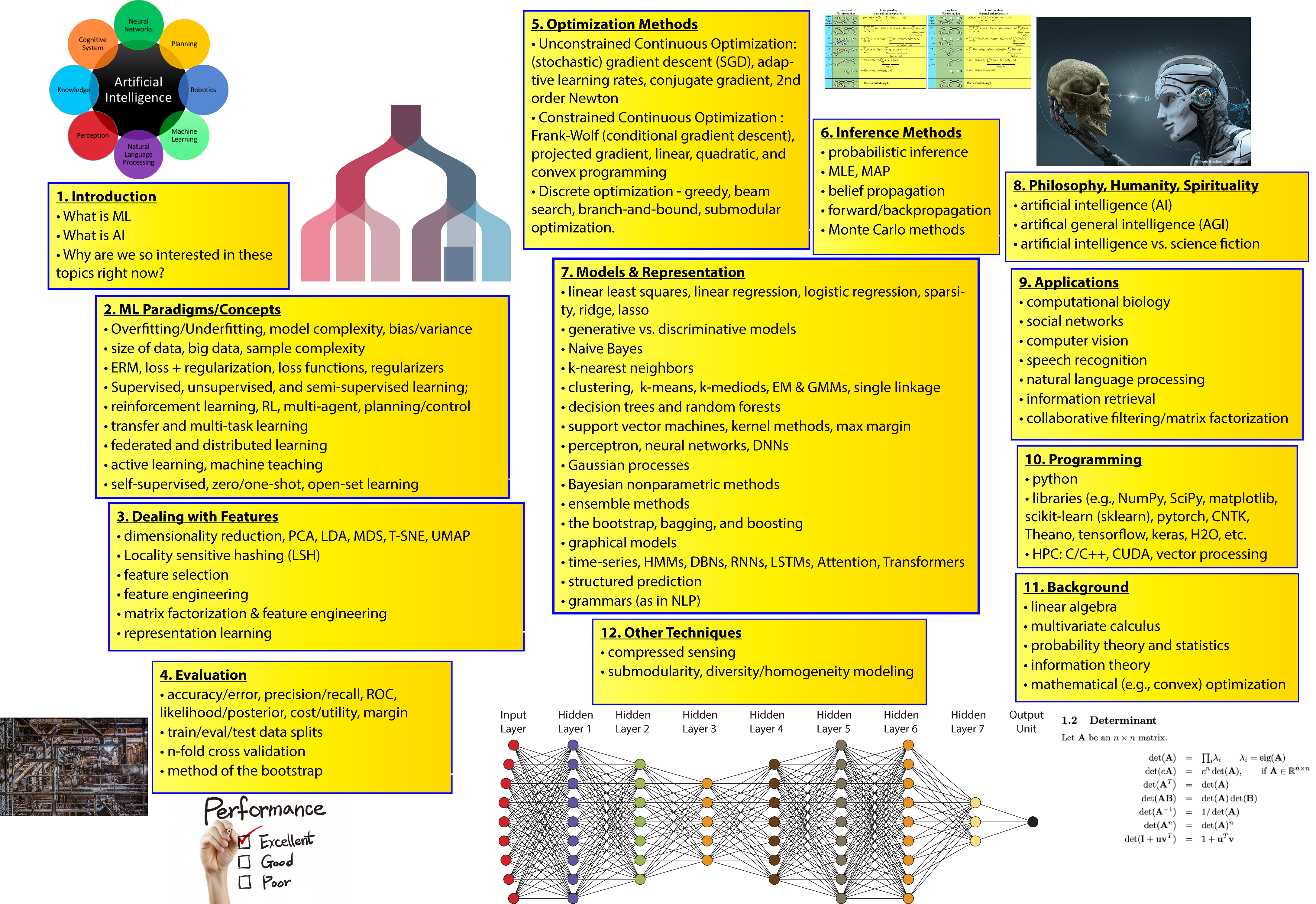

Description: This course will be a rapid 10-week advanced introduction to machine learning. This is an ambitious class that will provide a broad overview of a large variety of machine learning methods in a short amount of time. You will learn the basics of: linear regression; logistic regression; k-nearest neighbors; PCA, LDA, and dimensionality reduction methods; feature selection and engineering; cross validation; the bootstrap, bagging, and boosting; decision trees and random forests; naive Bayes; generative vs. discriminative models; support vector machines and kernel methods; neural networks; Bayesian nonparametric methods; clustering; ensemble methods; reinforcement learning; representation learning; information theory; Gaussian processes; supervised, unsupervised, and semi-supervised learning; graphical models; sparsity and compressed sensing; planning and control; information retrieval; structured prediction; matrix factorization; Monte Carlo methods; time-series analysis and HMMs; multi-agent learning; transfer and multi-task learning; active learning; submodularity; and machine teaching. Along the way, we will motivate the above using applications in computational biology, networks, computer vision, speech recognition, and natural language processing. We will also touch on the philosophy of machine learning and artificial intelligence, and discuss whether we can build a computer program having artificial general intelligence. The class will require programming in Python and the use of Python libraries (e.g., numpy, sklearn, and pytorch). Previous knowledge of linear algebra, calculus, and basic probability theory and statistics is a must.

The class overview image attempts to fix the fact that the above list is long, disorganized, and not very useful.

Syllabus: see the slides from lecture 1.

Homework

Homework is announced, handed out, and discussed on Canvas, and must be done and submitted entirely electronically there, via the following link: https://canvas.uw.edu/courses/1372141/assignments.

Lecture Slides

Lecture slides will be made available as they are prepared; they will probably appear shortly before a given lecture, in PDF format (the original source is LaTeX). Note that these slides are corrected after the lecture (and might also include some additional discussion we had during lecture). If you find bugs/typos in these slides, please email me.

| Week # | Slides | Post Lecture Slides | Lecture Dates | Contents |
|---|---|---|---|---|
| 1 | | | 3/30/20, 4/1/20 | What is ML, Probability, Uncertainty, Gaussians, Linear Regression, Associative Memories, Supervised Learning, Batch vs. Online Gradient Descent. |
| 2 | pdf and pdf | | 4/6-8/20 | On Underfitting and Overfitting (also see writeup on this), Classification, Logistic Regression, Complexity and the Bias/Variance Tradeoff |
| 3 | pdf and pdf | | 4/13-15/20 | More Bias/Variance, Regularization, Ridge Regression, Cross Validation, Multiclass Classification |
| 4 | | | 4/20-22/20 | Empirical Risk Minimization (ERM), Generative vs. Discriminative Modeling, Naive Bayes, Start Lasso |
| 5 | pdf and pdf | | 5/4-6/20 | More Lasso, Regularizers, Curse of Dimensionality, Dimensionality Reduction |
| 6 | pdf and pdf | | 5/11-13/20 | More Curse of Dimensionality and Dimensionality Reduction (PCA, LDA, Random projections, auto-encoders, tSNE), k-NN |
| 7 | pdf and pdf | | 5/11-13/20 | k-NN, Universal Consistency, LSH, Decision Trees. |
| 8 | pdf and pdf | | 5/18-20/20 | More DTs, Bootstrap/Bagging, Boosting & Random Forests, GBDTs, Graphs |
| 9 | | | 5/27/20, 6/1/20 | Graphical Models (Factorization, Inference, MRFs, BNs); Learning Paradigms; Clustering |
| 10 | pdf and pdf | | 6/3-5/20 | EM Algorithm; Spectral Clustering, Graph Semi-supervised Learning, Deep models, (SVMs, RL); No Free Lunch, Learning Paradigms, The Future. |

Discussion Board

You can post questions, discussion topics, or general information at this link.

Relevant Books

There are many books available that discuss some of the material that we are covering in this course. See the end of the lecture slides for books/papers that are relevant to each specific lecture, and see lecture1.pdf for a description of our book (Cover and Thomas) and other books/papers relevant to this class.

- Writeup on underfitting, overfitting, validation curves, and learning curves.

- See lecture 1 slides for our text and other relevant texts.

Important Dates/Exceptions

Religious Accommodations

- Washington state law requires that UW develop a policy for accommodation of student absences or significant hardship due to reasons of faith or conscience, or for organized religious activities. The UW's policy, including more information about how to request an accommodation, is available at Religious Accommodations Policy (https://registrar.washington.edu/staffandfaculty/religious-accommodations-policy/). Accommodations must be requested within the first two weeks of this course using the Religious Accommodations Request form (https://registrar.washington.edu/students/religious-accommodations-request/).