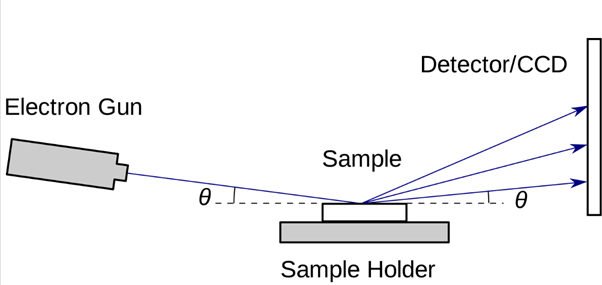

RHEED Overview

RHEED (reflection high-energy electron diffraction) is a diffraction technique used in materials science for studying the surface structure of crystals. In RHEED, a beam of high-energy electrons is directed at the sample surface at a low (grazing) angle. The electrons interact with the atoms in the surface layers of the sample, and their scattering pattern provides information about the surface structure, including its symmetry, roughness, and atomic arrangement.

RHEED System

Problem Statement

Currently available systems that process RHEED data to analyze material properties, such as crystal structure, are held to sub-second cycle times for inference by the long processing times involved. This limits how tightly the crystal growth process can be controlled. Faster capture and monitoring of crystal growth can also help illuminate the physics behind it. These systems are also expensive, because they rely on proprietary hardware and software sold by a small number of companies.

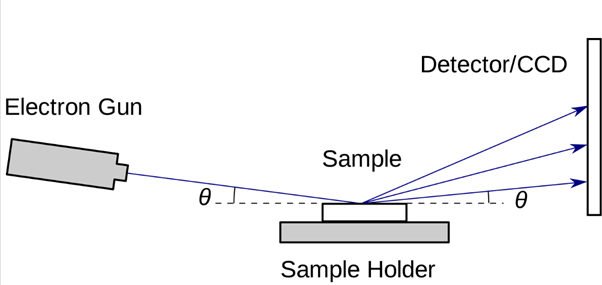

The proposed solution pairs a high-speed camera focused on the RHEED diffraction pattern with an onboard FPGA, enabling real-time, hardware-accelerated machine-learning inference on the incoming images. A Euresys frame grabber board with an onboard FPGA interfaces the camera with user-designable custom logic.

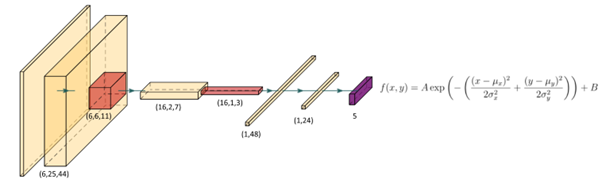

In this case, the custom logic is a convolutional neural network that isolates the diffraction peaks in the incoming images and estimates their parameters. The neural network is trained in Python and converted into synthesizable RTL using HLS4ML (see HLS4ML under Research Projects) and Vivado HLS. This process is described in more detail in this Medium article by Ryan Forelli.
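To make "estimating the parameters of a diffraction peak" concrete, here is a minimal sketch that renders a synthetic Gaussian spot and recovers its centre and widths from intensity-weighted image moments. This is not the project's neural network, only a classical stand-in for the kind of quantities such a network might output. The choice of parameters (centre and per-axis width) and the function names `gaussian_spot` and `estimate_spot_moments` are assumptions made for illustration.

```python
import numpy as np

def gaussian_spot(shape, x0, y0, sigma_x, sigma_y, amplitude=1.0):
    """Render a synthetic, axis-aligned 2D Gaussian spot (illustrative only)."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    return amplitude * np.exp(-((xs - x0) ** 2 / (2 * sigma_x ** 2)
                                + (ys - y0) ** 2 / (2 * sigma_y ** 2)))

def estimate_spot_moments(image):
    """Estimate spot centre and widths from intensity-weighted moments.

    Assumes a single spot on a zero background; real RHEED frames would
    need background subtraction first.
    """
    ys, xs = np.mgrid[0:image.shape[0], 0:image.shape[1]]
    total = image.sum()
    x0 = (xs * image).sum() / total
    y0 = (ys * image).sum() / total
    sigma_x = np.sqrt((((xs - x0) ** 2) * image).sum() / total)
    sigma_y = np.sqrt((((ys - y0) ** 2) * image).sum() / total)
    return x0, y0, sigma_x, sigma_y

# Hypothetical spot: 64x64 frame, centre (30, 34), widths 4 and 6 pixels.
spot = gaussian_spot((64, 64), x0=30.0, y0=34.0, sigma_x=4.0, sigma_y=6.0)
x0, y0, sigma_x, sigma_y = estimate_spot_moments(spot)
print(f"centre=({x0:.2f}, {y0:.2f}), widths=({sigma_x:.2f}, {sigma_y:.2f})")
```

A moment-based estimate like this is sensitive to background and noise, which is one reason a learned model can be attractive for real diffraction images.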

Current Work

A version of this system has been implemented for the analysis of a single gaussian spot. One

image was able to be processed every 450 to 750 μs with high accuracy. Work is now being

done to quantize and improve the accuracy of the convolutional neural network, as well as

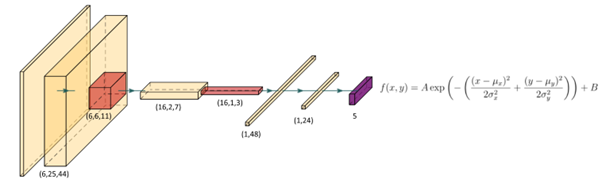

implement the capability to analyze more than one spot at a time. As shown in the example

below, RHEED produces a diffraction pattern with many spots.

RHEED Example
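The per-image processing time of 450 to 750 μs reported for the single-spot system can be converted into an approximate frame rate. The short calculation below assumes images are processed strictly one after another with no overlap between frames, which is a simplification; the helper name `frames_per_second` is hypothetical.

```python
def frames_per_second(latency_us):
    """Convert a per-image processing time in microseconds to frames per second.

    Assumes one image is fully processed before the next begins.
    """
    return 1_000_000 / latency_us

for latency_us in (450, 750):
    print(f"{latency_us} us per image -> {frames_per_second(latency_us):.0f} frames/s")
```

Under that assumption the single-spot system handles roughly 1,300 to 2,200 images per second.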

To allow the system to analyze multiple spots at the same time, a custom Keras and HLS4ML layer was created to efficiently crop regions of interest from input images based on user-supplied coordinates. The cropped images will be fed serially into the convolutional neural network to conserve resources on the FPGA.
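The cropping step can be pictured with a small NumPy sketch. This is not the project's Keras/HLS4ML layer; it only illustrates taking fixed-size regions of interest around user-supplied coordinates and handing them out one at a time. The function name `crop_rois`, the 16-pixel crop size, and the clamping behaviour at the frame edges are all assumptions.

```python
import numpy as np

def crop_rois(image, centers, size):
    """Yield fixed-size square crops centred near each (row, col) coordinate.

    Crops that would cross the frame edge are shifted inward so that every
    crop has the same shape (an assumed policy, chosen for simplicity).
    """
    half = size // 2
    height, width = image.shape
    for row, col in centers:
        top = int(np.clip(row - half, 0, height - size))
        left = int(np.clip(col - half, 0, width - size))
        yield image[top:top + size, left:left + size]

# Hypothetical 100x100 frame and three user-supplied spot coordinates.
frame = np.arange(100 * 100, dtype=np.float32).reshape(100, 100)
centers = [(20, 30), (50, 50), (98, 2)]

# Consuming the generator one crop at a time mirrors the serial feeding idea:
# a single downstream model instance sees each region in turn.
crops = list(crop_rois(frame, centers, size=16))
for center, crop in zip(centers, crops):
    print(center, crop.shape)
```

Keeping every crop the same shape means a single fixed-input network can be reused for each region, which is the resource-saving idea described above.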

Structure of the neural network

Future Work

Possible future additions to this project include:

● Using a YOLO-style neural network to pass the region of interest coordinates to the custom crop layer to reduce human input to the system.

● Training a single convolutional neural network to classify multiple spots at the same time using the custom crop layer.

● Providing control feedback to the RHEED system using the results of the spot analysis.

Collaboration

This work is done in collaboration with Prof. Josh Agar from Drexel University.

Researchers: Abdelrahman Elabd, Pujan Patel, Matthew Wilkinson.